BearCubs

A small but mighty benchmark for computer-using web agents

🐻 BearCubs 🐻 evaluates the capability of web agents to search, browse, and extract factual information from the live web through complex and diverse text-based and multimodal interactions. For more details, check out our paper! ✨

About the benchmark: BearCubs comprises 111 carefully crafted questions covering a wide range of topics, including but not limited to music, maps, videos, games, and virtual tours. Each question is designed to be adversarial to closed-book LLMs and simple Google searches. Answers are concise and uniquely formulated to eliminate ambiguity and paraphrasing. Additionally, all questions can be answered without accessing content behind paywalls or login restrictions.

Data updates: We continuously validate existing questions and answers while introducing new, more challenging ones. Check the bottom of the webpage for the latest update date. If you're interested in pushing the boundaries of state-of-the-art agents, consider contributing to the BearCubs dataset! 🚀

In the table below, a CU agent is an agent with computer use capabilities that can perform interactive browsing by processing pixels on the screen and controlling a virtual keyboard and mouse.

| Accuracy | ||||

|---|---|---|---|---|

| Category | Model | |||

| Human | Human | 84.7% | 83.6% | 85.7% |

| CU agents | ChatGPT Agent | 65.8% | 76.8% | 54.5% |

| Non-CU agents | OpenAI Deep Research | 36.0% | 60.7% | 10.9% |

| Non-CU agents | Google Deep Research | 23.4% | 42.9% | 3.6% |

| CU agents | OpenAI Operator | 23.4% | 33.9% | 12.7% |

| CU agents | Anthropic Computer Use | 14.4% | 19.6% | 9.1% |

| CU agents | Convergence AI Proxy | 12.6% | 16.1% | 9.1% |

| Non-CU agents | Grok3 DeepSearch | 11.7% | 21.4% | 1.8% |

| LLM baselines | DeepSeek R1 zero-shot | 8.1% | 10.7% | 5.5% |

| LLM baselines | Perplexity sonar-pro | 5.4% | 8.9% | 1.8% |

| LLM baselines | GPT-4o zero-shot | 2.7% | 5.4% | 0.0% |

| LLM baselines | DeepSeek R1 + Google Search | 1.8% | 3.6% | 0.0% |

| LLM baselines | GPT-4o + Google Search | 0.0% | 0.0% | 0.0% |

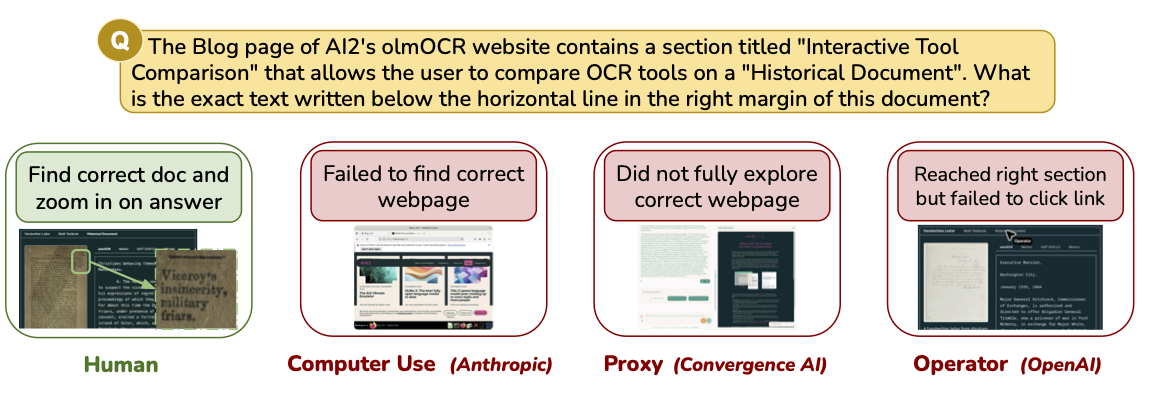

Example Question

Gold Answer Access & Usage Policy

To maintain responsible use of our evaluation data, we do not publicly release the gold answers associated with this project. Researchers interested in accessing the gold answers may request them via email at bearcubsteam@gmail.com. Access will be granted on a case-by-case basis for non-commercial research purposes only.

Please note:

- Redistribution or open-sourcing of the gold answers is strictly prohibited.

- By requesting access, you agree to use the answers solely for internal evaluation or research.

- Any use of the gold answers must cite our work appropriately

Team

Yixiao Song

Katherine Thai

Chau Minh Pham

Yapei Chang

Mazin Nadaf

Mohit Iyyer

Website last updated July 15, 2025